
EXPLAINABLE AI IN DIGITAL HEALTH

“Explainable AI working along with human professionals can deliver new levels of medical care”

Antonis C. Kakas is a Professor at the Department of Computer Science of the University of Cyprus. He studied Mathematics at Imperial College, London, and then obtained his Ph.D. in Theoretical Physics from the same college in 1984. His interest in Computing and AI began in 1989 in the group of Professor Kowalski. Since then, his research has concentrated on Computational Logic in AI, with particular interest in Argumentation, Abductive and Inductive Reasoning and their application to Machine Learning and Cognitive Systems. He has published over 100 papers, and his work is widely cited. At UCY he has co-developed several research AI systems, including the argumentation system GORGIAS, which has been applied to a wide range of problems in multi-agent systems and cognitive agents, particularly in the area of digital health. With others, he has proposed Argumentation Logic as a logic that offers a new perspective on logic-based Explainable AI. Currently, he is developing a new framework of Cognitive Programming that aims to offer an environment for building Human-centric AI systems that can be used naturally by developers and by human users at large. With his students and collaborators, he is developing two new systems, COGNICA for Cognitive Argumentation and ArgEML for Explainable Machine Learning, to support the Cognitive Programming framework and its application to medical decision support. He has served as the National Contact Point for Cyprus in AI4EU, the flagship EU project on AI.

Professor (Emeritus) Antonis C. Kakas
Department of Computer Science
University of Cyprus
antonis-AT-ucy.ac.cy; (+357) 22 892706

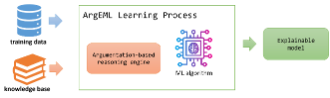

Argumentation-based Explainable Machine Learning (ArgEML)

We are developing a framework for explainable machine learning that uses argumentation both as the target language for learning and as the notion of prediction. This framework, called ArgEML, integrates sub-symbolic methods with the logical methods of symbolic AI argumentation to provide explainable solutions to learning problems. Predictions are accompanied by detailed explanations so that the human professional can make more informed decisions. Furthermore, the natural explainability of the argumentation-based approach can help us structure and partition the problem space of learning into meaningful subspaces.

The approach has been applied to several real-life medical problems, such as prediction of the risk of stroke in Asymptomatic Carotid Stenosis, Endometrial Cancer detection and prediction of the risk of intracranial aneurysm rupture.
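To make the idea of "argumentation as the notion of prediction" concrete, here is a minimal Python sketch under deliberately simplified assumptions: each learned rule is read as an argument for a class label, conflicts between arguments are resolved by a numeric strength, and the explanation names the winning argument and the arguments it overrides. The `Argument` class, the `predict` function, the integer strength scheme and the toy features `x1` and `x2` are all hypothetical illustrations; this is not the ArgEML or GORGIAS implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Case = Dict[str, float]


@dataclass
class Argument:
    """A learned rule read as an argument: if `condition` holds, argue for `label`."""
    name: str
    label: str
    condition: Callable[[Case], bool]
    strength: int  # hypothetical preference: a stronger argument defeats a weaker, conflicting one


def predict(arguments: List[Argument], case: Case) -> Tuple[Optional[str], str]:
    """Return (label, explanation), or (None, reason) when the arguments do not settle the case."""
    applicable = [a for a in arguments if a.condition(case)]
    # An applicable argument is defeated by any applicable argument for a
    # different label that has strictly higher strength.
    undefeated = [
        a for a in applicable
        if not any(b.label != a.label and b.strength > a.strength for b in applicable)
    ]
    labels = {a.label for a in undefeated}
    if len(labels) != 1:
        # Either no argument applies or equally strong arguments disagree: abstain.
        return None, "no single undefeated conclusion"
    winner = max(undefeated, key=lambda a: a.strength)
    overridden = [b.name for b in applicable if b.label != winner.label]
    explanation = f"{winner.label} because {winner.name}"
    if overridden:
        explanation += f", preferred over {', '.join(overridden)}"
    return winner.label, explanation


# Toy usage with hypothetical features x1 and x2.
rules = [
    Argument("r1 (x1 > 0.5)", "positive", lambda c: c["x1"] > 0.5, strength=1),
    Argument("r2 (x2 < 0.2)", "negative", lambda c: c["x2"] < 0.2, strength=2),
]
label, why = predict(rules, {"x1": 0.9, "x2": 0.1})
print(label, "|", why)
# prints: negative | negative because r2 (x2 < 0.2), preferred over r1 (x1 > 0.5)
```

Abstaining when equally strong arguments disagree keeps the sketch honest: rather than guessing, it signals that the case falls in a region of the problem space where the learned arguments do not settle the outcome, which is where a human professional's judgement is most needed.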

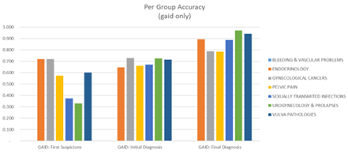

Human-centric AI (HCAI) for Medical Support

Human-centric artificial intelligence (HCAI) aims to provide support systems that can act as peer companions to an expert in a specific domain by simulating the expert's way of thinking and decision-making when solving real-life problems. We are developing a methodology for building such systems in the medical domain. The gynaecological artificial intelligence diagnostics (GAID) assistant is one such system.

Built on artificial intelligence (AI) argumentation technology, GAID is designed to incorporate as complete a representation of the medical knowledge in gynaecology as possible and to become a real-life tool that practically enhances the quality of healthcare services and reduces stress for the clinician. The system provides explanations that support a diagnosis against other possible diseases. These explanations enable a learning process in which the system is improved modularly through feedback on diagnostic discrepancies between the system and a specialist. GAID has been tested on real-life examples of known past clinical visits, achieving reasonable levels of accuracy compared with a senior doctor.
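The two ideas in the paragraph above, explaining a diagnosis against the alternatives and collecting discrepancies with a specialist as feedback, can be sketched in Python as follows. This is a hypothetical illustration only: the knowledge-base layout, the `score`, `diagnose` and `discrepancies` functions, and the names `disease_A`, `disease_B`, `f1` to `f3` are placeholders, and the simple count of supporting minus opposing findings is a swapped-in stand-in for the argumentation-based reasoning that GAID itself uses.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical knowledge base: for each candidate disease, the findings that
# argue for it and the findings that argue against it.
KnowledgeBase = Dict[str, Dict[str, Set[str]]]


def score(kb: KnowledgeBase, disease: str, findings: Set[str]) -> int:
    """Count supporting findings minus opposing findings present in the case."""
    return len(kb[disease]["for"] & findings) - len(kb[disease]["against"] & findings)


def diagnose(kb: KnowledgeBase, findings: Set[str]) -> Tuple[str, List[str]]:
    """Pick the best-scoring disease and explain it against each alternative."""
    # sorted() is stable, so ties keep the knowledge-base order.
    ranked = sorted(kb, key=lambda d: score(kb, d, findings), reverse=True)
    best = ranked[0]
    explanation = [f"{best} is supported by {sorted(kb[best]['for'] & findings)}"]
    for other in ranked[1:]:
        explanation.append(
            f"preferred over {other}: support {sorted(kb[other]['for'] & findings)}, "
            f"contradicted by {sorted(kb[other]['against'] & findings)}"
        )
    return best, explanation


def discrepancies(
    kb: KnowledgeBase, cases: List[Tuple[Set[str], str]]
) -> List[Tuple[List[str], str, str]]:
    """Collect past cases where the system disagrees with the specialist, for review."""
    found = []
    for findings, specialist in cases:
        system, _ = diagnose(kb, findings)
        if system != specialist:
            found.append((sorted(findings), system, specialist))
    return found


kb: KnowledgeBase = {
    "disease_A": {"for": {"f1", "f2"}, "against": {"f3"}},
    "disease_B": {"for": {"f3"}, "against": {"f1"}},
}
best, why = diagnose(kb, {"f1", "f2"})
print(best)
print(why)
past_visits = [({"f1", "f2"}, "disease_A"), ({"f3"}, "disease_A")]
print(discrepancies(kb, past_visits))
```

Because each discrepancy records the findings, the system's answer and the specialist's answer, a reviewer can trace it to the specific entries for one disease and revise only those, which is one way to read the "modular improvement" described above.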


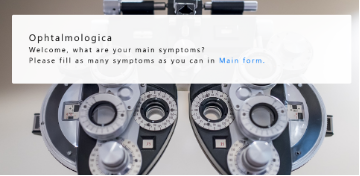

The Ophthalmological system is an Explainable AI triage system that aims to assess the possible severity of a patient’s eye symptoms so that the patient can be given the appropriate priority for seeing a doctor. The system takes as input the initial symptoms selected by the patient or a nurse; if these are not complete enough for a good prediction of the possible disease, the system asks the patient for further relevant information.

The output of the system is a suggestion of possible diseases, each accompanied by an explanation of why it is possible.
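The follow-up question step described above can be sketched in Python under simple, hypothetical assumptions: each condition is defined by a set of findings that must all be reported, the system asks only about findings that are still unknown for conditions that some reported symptom already points to, and it stops asking about a condition as soon as one required finding is denied. The `triage` function, the `ask` callback and the names `condition_X`, `s1` and so on are placeholders for illustration; this is not the actual triage system's logic or medical knowledge, and it leaves out severity and priority scoring.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical rule base: a condition is suggested only if all its findings are reported.
Rules = Dict[str, Set[str]]


def triage(rules: Rules, initial: Set[str], ask: Callable[[str], bool]) -> List[Tuple[str, str]]:
    """Ask follow-up questions only where the reported symptoms are incomplete."""
    present, absent = set(initial), set()
    suggestions = []
    for condition, required in rules.items():
        if not required & present:
            continue  # no symptom reported so far points to this condition; do not ask about it
        for finding in sorted(required - present - absent):
            if ask(finding):
                present.add(finding)
            else:
                absent.add(finding)
                break  # the condition is ruled out, so stop asking about it
        if required <= present:
            suggestions.append((condition, f"possible because {sorted(required)} were all reported"))
    return suggestions


rules: Rules = {"condition_X": {"s1", "s2"}, "condition_Y": {"s1", "s3"}, "condition_Z": {"s4"}}
answers = {"s2": True, "s3": False}  # stands in for the patient's replies
asked: List[str] = []


def ask(finding: str) -> bool:
    asked.append(finding)
    return answers.get(finding, False)


suggestions = triage(rules, {"s1"}, ask)
print(suggestions)
print(asked)
```

Passing the questioning step in as a callback keeps the reasoning separate from the user interface, so the same logic could be driven by a patient-facing form, a nurse, or a batch of recorded answers as in the usage above.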


SELECTED GRANTS


SELECTED PUBLICATIONS
